Over the last few years, the laboratory of neuroscientist Jack Gallant has published a series of studies whose findings sound absurd.

In 2011, the lab showed that it is possible to reconstruct video clips from the brain activity of the people watching them. By scanning the brain of a person watching a film and using a computer to reconstruct the film’s images, you can, in a sense, read minds. Similarly, in 2015, a team of scientists led by Gallant was able to determine which famous painting people were picturing in their minds by observing their brain activity.

This year the team announced in the journal Nature that they had created an atlas documenting where in the brain more than 10,000 individual words are represented, simply by studying participants’ brains as they listened to podcasts.

How did it all work? Through machine learning, or what we might call artificial intelligence, which can open up a huge treasure trove of information in the brain and identify patterns of activity that let researchers guess what’s going on in our minds.

The goal was not to build a mind-reading machine (though people often assume it was). Neuroscientists do not intend to steal passwords straight out of your head, and they are not interested in your terrible secrets either. The real purpose is much grander. By turning neuroscience into a big-data science and using machine learning to explore those data, Gallant and his colleagues have set out on a road that could revolutionize our ideas about the brain.

After all, the human brain is the most complex object known in the universe, and we barely understand it. The crazy idea of Gallant’s lab, one that could help neuroscience grow out of its infancy and stand on its own feet, is this: maybe we should build a special machine that will explain our own brains to us. The hope is that if we can decipher the incredibly intricate patterns of brain activity, we can understand how to reverse the effects of brain disease.

Functional MRI, the main tool for studying brain function, was invented only in the 1990s, and it gives only a very approximate picture.

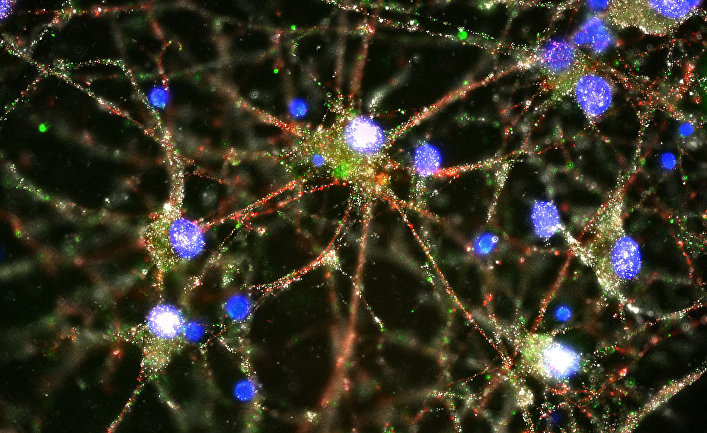

© AP Photo, Heather de Rivera/McCarroll Lab/Harvard via Amaroni brain

For comparison: the smallest unit of brain activity that fMRI can capture is the so-called voxel. Typically, a voxel is slightly smaller than a cubic millimeter, and a single voxel can contain 100,000 neurons. As Tal Yarkoni, a neuroscientist at the University of Texas, explained to me, fMRI is “like flying over a city looking for lit-up lamps.”

Traditional fMRI images can show broad regions associated with a behavior, for example, where negative emotions arise or which areas become active when we see a familiar face.

But we don’t know exactly what role a given area plays in our behavior, or whether less active zones are also crucial. The brain is not a LEGO set in which each piece has a specific purpose; it is a whole system of activity. “Each area of the brain has a 50 percent chance of being connected to any other region,” says Gallant.

Therefore, simple experiments that search the brain for, say, a “hunger zone” or an “attention zone” cannot really give satisfactory results.

“For the last 15 years, we’ve looked at blobs of activity and thought that was all the information there was: just blobs,” Peter Bandettini, head of the fMRI methods section at the National Institute of Mental Health, told me in July. “But it turns out that every nuance of every blob, the slightest change in its fluctuation, contains information about brain activity, and we haven’t even gotten there yet. That’s why we need machine learning. Our eyes see these blobs but can’t make out their structure. The structure is too complicated.”

Here is an example. The traditional view of how the brain handles language is that the process takes place in the left hemisphere, where two special areas, Broca’s area and Wernicke’s area, serve as centers of linguistic activity. If these areas are damaged, you lose the ability to use language.

But Alex Huth, a postdoctoral fellow in Gallant’s lab, has recently shown that this understanding is too simplistic. Huth wanted to know whether the whole brain is involved in language.

In one experiment, participants listened to two hours of stories from the podcast “The Moth” while Huth and his colleagues recorded their brain activity with fMRI scanners. The goal was to link the activity of specific brain areas to hearing specific words.

This produced an enormous amount of data, more than any person could handle, Gallant said. But a computer program can be trained to find patterns in it. And with the program Huth created, it was possible to draw an atlas showing where a particular word “lives” in the brain.
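
To make the idea of a word “atlas” concrete, here is a deliberately simplified sketch. It is not Huth’s actual method, which the article does not describe; it assumes we already have, for every word heard, one activity value per voxel, groups the words into hypothetical categories, and labels each voxel with the category that drives it most on average. The function name `build_toy_atlas` and all the numbers are invented for illustration.

```python
from collections import defaultdict


def build_toy_atlas(trials, n_voxels):
    """Map each voxel to the word category that activates it most on average.

    trials: list of (category, responses) pairs, where responses holds one
    activity value per voxel (a hypothetical data format).
    Returns a list with one category name per voxel.
    """
    sums = [defaultdict(float) for _ in range(n_voxels)]
    counts = defaultdict(int)
    for category, responses in trials:
        counts[category] += 1
        for v, value in enumerate(responses):
            sums[v][category] += value
    atlas = []
    for v in range(n_voxels):
        # Mean response of voxel v to each category; keep the strongest one.
        means = {c: sums[v][c] / counts[c] for c in counts}
        atlas.append(max(means, key=means.get))
    return atlas


# Hypothetical recordings: 3 voxels, words grouped into two categories.
trials = [
    ("animals", [0.9, 0.1, 0.5]),  # e.g. hearing "dog"
    ("animals", [0.8, 0.2, 0.4]),  # e.g. hearing "poodle"
    ("numbers", [0.1, 0.9, 0.6]),  # e.g. hearing "seven"
    ("numbers", [0.2, 0.7, 0.7]),  # e.g. hearing "twelve"
]
print(build_toy_atlas(trials, n_voxels=3))  # ['animals', 'numbers', 'numbers']
```

A real atlas involves tens of thousands of voxels and a statistical model fitted to continuous speech, but the output has the same shape: a label for each location in the brain.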

“Alex’s research has shown that a huge part of the brain is involved in semantic interpretation,” says Gallant. He also showed that words with related meanings, such as “poodle” and “dog,” reside next to each other in the brain.

So what is the value of such a project? In science, prediction is power. If scientists can explain how a dizzying flurry of brain activity reflects language processing, they will be able to build a better model of the brain. And if they create a working model, we can better understand what happens when the variables change, that is, when the brain is unhealthy.

What is machine learning?

“Machine learning” is a broad term that covers a huge array of software. In consumer technology, machine learning is advancing by leaps and bounds: for example, it is learning to identify, that is, to “see,” objects in a photo almost as well as a human. Using a machine learning technique called “deep learning,” Google Translate has evolved from a rudimentary tool (that often gave very funny results) into software that can translate Hemingway into a dozen languages in a style that can compete with professional translators.

But at a basic level, machine learning software looks for patterns: how likely is it that variable X is correlated with variable Y?
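
As a minimal sketch of what “how likely is it that X is correlated with Y” can mean, the code below computes the Pearson correlation between two variables and then estimates, with a permutation test, how often randomly shuffled data would correlate at least as strongly. The permutation test is one standard choice among several; the function names, the parameters `n_perm` and `seed`, and the example data are our own.

```python
import math
import random


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def permutation_p_value(xs, ys, n_perm=1000, seed=0):
    """Estimate how often shuffled data correlate at least as strongly.

    Shuffling ys breaks any real link between X and Y, so the fraction of
    shuffles with |r| >= |r_observed| approximates the probability of
    seeing such a strong correlation by chance alone.
    """
    rng = random.Random(seed)
    observed = abs(pearson_r(xs, ys))
    shuffled = list(ys)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)
        if abs(pearson_r(xs, shuffled)) >= observed:
            hits += 1
    # The +1 terms count the observed arrangement as one of the possibilities.
    return (hits + 1) / (n_perm + 1)


stimulus_strength = [1, 2, 3, 4, 5, 6, 7, 8]                # hypothetical X
voxel_signal = [0.9, 1.3, 1.2, 2.1, 2.0, 2.8, 3.1, 3.0]    # hypothetical Y
print(pearson_r(stimulus_strength, voxel_signal))
print(permutation_p_value(stimulus_strength, voxel_signal))
```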

© AP Photo, KEYSTONE/Salvatore Di Nolfi. A doctor conducting an MRI study in a laboratory in Switzerland

Typically, a machine learning program first needs “training” on a particular data set. The more training data, the smarter the program usually becomes. After training, the program is given entirely new data sets that it has never encountered before, and based on these new data it can start making predictions.
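
The train-then-predict cycle can be illustrated with a toy nearest-centroid classifier, chosen here only because it is about the simplest learner there is, not because any lab in this story uses it. “Training” averages the examples of each class, prediction assigns a new example to the closest average, and accuracy is measured on a held-out test set that the program never saw. The data and names are hypothetical.

```python
def train_centroids(examples):
    """'Training': average the feature vectors of each labelled class."""
    sums, counts = {}, {}
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {label: [s / counts[label] for s in sums[label]] for label in sums}


def predict(centroids, features):
    """Assign a new example to the class with the nearest centroid."""
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(centroids, key=lambda label: sq_dist(centroids[label], features))


def accuracy(centroids, examples):
    """Fraction of examples whose predicted label matches the true one."""
    correct = sum(predict(centroids, f) == label for f, label in examples)
    return correct / len(examples)


# Hypothetical two-feature data; the test set is never seen during training.
train_set = [([1.0, 1.2], "A"), ([0.8, 1.0], "A"), ([3.1, 2.9], "B"), ([2.8, 3.2], "B")]
test_set = [([1.1, 0.9], "A"), ([3.0, 3.0], "B")]
model = train_centroids(train_set)
print(accuracy(model, test_set))  # 1.0
```

Keeping the test set separate is the point: a program that merely memorized its training data would look perfect on that data and still fail on new data.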

A simple, good example is your spam filter. A self-learning program has scanned a sufficient number of “junk” messages, studied the language patterns they contain, and can now recognize spam in your incoming email.
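
A common textbook way to build such a filter is a naive Bayes classifier. The sketch below assumes that approach (real filters are far more elaborate): it counts how often each word appears in spam and in legitimate mail, then scores a new message by adding up log-probabilities. The six training messages are made up.

```python
import math
from collections import Counter


def train_spam_filter(messages):
    """Count word frequencies per class from (text, is_spam) pairs."""
    word_counts = {True: Counter(), False: Counter()}
    class_counts = Counter()
    for text, spam in messages:
        class_counts[spam] += 1
        word_counts[spam].update(text.lower().split())
    vocab = set(word_counts[True]) | set(word_counts[False])
    return word_counts, class_counts, vocab


def is_spam(model, text):
    """Naive Bayes: compare log P(class) + sum of log P(word | class)."""
    word_counts, class_counts, vocab = model
    total_messages = sum(class_counts.values())
    scores = {}
    for label in (True, False):
        total_words = sum(word_counts[label].values())
        score = math.log(class_counts[label] / total_messages)
        for word in text.lower().split():
            # Laplace (add-one) smoothing keeps unseen words from zeroing out.
            count = word_counts[label][word] + 1
            score += math.log(count / (total_words + len(vocab)))
        scores[label] = score
    return scores[True] > scores[False]


training = [
    ("win free money now", True),
    ("free prize claim now", True),
    ("cheap pills free offer", True),
    ("meeting agenda for tomorrow", False),
    ("lunch tomorrow with the team", False),
    ("project report attached", False),
]
model = train_spam_filter(training)
print(is_spam(model, "claim your free money"))   # True
print(is_spam(model, "agenda for the meeting"))  # False
```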

Machine learning can be used in very simple programs that just compute a mathematical regression (remember high school math? Hint: it’s about finding the slope of the line that best describes the relationship between several points on a graph). Or it can be something like Google DeepMind, which processed millions of data sets and helped Google build a computer that beat a human at Go, a game so complex that its board and stones allow more possible configurations than there are atoms in the universe.
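
The “slope of the line” from high school is ordinary least squares. A minimal sketch: the slope is the covariance of x and y divided by the variance of x, and the fitted line passes through the point of means.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    # Slope = covariance(x, y) / variance(x); the shared 1/n factors cancel.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


slope, intercept = fit_line([0, 1, 2, 3], [1, 3, 5, 7])
print(slope, intercept)  # 2.0 1.0
```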

Neuroscientists use machine learning for several different purposes. The two main ones are encoding and decoding.

“Encoding” means that the machine learning program tries to predict what pattern of brain activity a certain stimulus will cause.

“Decoding” is the inverse process: from information about which areas of the brain are active, the program must determine what the participants in the experiment are looking at.
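
Both directions can be sketched together. In the toy code below, which is an illustration under our own assumptions rather than any lab’s pipeline, encoding fits one ridge-regularized linear model per voxel that maps stimulus features to activity; decoding then runs the encoding model on each candidate stimulus and picks the one whose predicted pattern is closest to the observed pattern. The two-number stimulus features, the voxel weights, and the candidate names are all invented.

```python
def solve(a, b):
    """Solve the square linear system a @ w = b by Gauss-Jordan elimination."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented copy
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def fit_encoding_model(stimuli, responses, ridge=1e-3):
    """Encoding: one ridge-regularized linear model per voxel.

    Solves (X^T X + ridge * I) w = X^T y for each voxel's responses y.
    Returns weights[v][k] = effect of stimulus feature k on voxel v.
    """
    k = len(stimuli[0])
    xtx = [[sum(s[i] * s[j] for s in stimuli) + (ridge if i == j else 0.0)
            for j in range(k)] for i in range(k)]
    weights = []
    for v in range(len(responses[0])):
        xty = [sum(s[i] * r[v] for s, r in zip(stimuli, responses)) for i in range(k)]
        weights.append(solve(xtx, xty))
    return weights


def predict_pattern(weights, stimulus):
    """Predicted activity of every voxel for one stimulus."""
    return [sum(w * f for w, f in zip(wv, stimulus)) for wv in weights]


def decode(weights, observed, candidates):
    """Decoding: pick the candidate whose predicted pattern best matches."""
    def sq_err(name):
        predicted = predict_pattern(weights, candidates[name])
        return sum((p - o) ** 2 for p, o in zip(predicted, observed))
    return min(candidates, key=sq_err)


# Hypothetical ground truth: 3 voxels, each driven by 2 stimulus features.
true_weights = [[1.0, 0.0], [0.0, 2.0], [1.0, 1.0]]
stimuli = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 1.0]]
responses = [predict_pattern(true_weights, s) for s in stimuli]
model = fit_encoding_model(stimuli, responses)

candidates = {"face": [1.0, 0.2], "house": [0.1, 1.5]}
observed = predict_pattern(true_weights, candidates["house"])
print(decode(model, observed, candidates))  # house
```

The small ridge penalty keeps the per-voxel fits stable when features are correlated or data are scarce, which is the usual situation with real fMRI.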

(Note: besides fMRI, neuroscientists also use machine learning with data from other brain-activity recordings, such as EEG and MEG.)

Brice Kuhl, a neuroscientist at the University of Oregon, recently used decoding to reconstruct the faces that participants were looking at, based only on fMRI data.

The brain region Kuhl recorded in his MRI scans has long been known to be associated with vivid memories. “Does this area represent specific features of what you saw, or does it just [become active] because you have vivid memories?” asks Kuhl. Because the machine learning program could work out facial features from the activity in this region, we can assume that the area really does hold information about the “details seen.”

Top row: the real faces used in Kuhl’s experiment. Bottom two rows: reconstructions based on the activity of two different brain areas. The reconstructions are far from perfect, but they capture the main characteristics of the originals: gender, skin tone, and smile. Image from The Journal of Neuroscience.

In a similar way, Gallant’s experiment to determine which artwork a participant was thinking of revealed another small secret of the brain: we activate the same brain areas when we remember visual information as when we actually perceive it.

The neuroscientists I talked to said that machine learning has not yet produced a real revolution in their field. The main reason is that they don’t have enough data. Brain scanning is very time-consuming and expensive, so experiments usually involve a few dozen participants, not a few thousand.

“In the ’90s, when brain imaging first came out, people were looking for differences in how different categories of things are perceived: which part of the brain becomes active when we perceive faces, and which when we perceive houses or tools. It was a broad-brush task,” says Avniel Ghuman, a researcher in neurodynamics at the University of Pittsburgh. “Now we can ask more specific questions. For example, is a person thinking about the same thing now as 10 minutes ago?”

This progress “is evolutionary rather than revolutionary,” he says.

Neuroscientists hope that machine learning will help to diagnose and treat mental disorders

Today, psychiatrists can’t simply scan a patient with fMRI and determine from brain activity alone whether the patient has a mental disorder such as schizophrenia. They have to rely on clinical interviews with the patient (which are, no doubt, very important). But a software-based diagnostic approach could help distinguish one disorder from another, which would inform the choice of treatment. To achieve this, according to Bandettini of the National Institute of Mental Health, neuroscientists need access to huge databases of fMRI scans of brain activity from some 10,000 subjects.

A machine learning program could search these data sets for signal patterns of mental disorders. “And then we can go back and use it all more clinically: scan a person and say, ‘Based on a biomarker selected from a database of 10,000 people, we can diagnose, say, schizophrenia,’” explains Bandettini. Work in this direction is still preliminary and has not yet produced significant results.

But with a sufficient understanding of how the parts of the brain interact, we could “plan more and more complex interventions to correct brain function if something goes wrong,” says Dan Yamins, a computational neuroscientist at the Massachusetts Institute of Technology. “For example, we might be able to put in some kind of implant that somehow corrects Alzheimer’s or Parkinson’s.”

Machine learning could also help psychiatrists predict in advance how a particular patient’s brain will respond to a specific drug treatment for depression. “Today, psychiatrists can only guess which medicine is likely to be effective based on the diagnosis,” says Yamins. “Because the information the symptoms provide does not give a sufficiently accurate picture of what is happening in the brain.”

He stressed that this will not happen in the near future. But scientists have begun to think about these issues. The journal NeuroImage has devoted an entire special issue to research on individual differences in the brain and on diagnosis based solely on neuroimaging data.

It’s important work: for our health, decoding the brain could offer new ways of treatment and prevention.

Machine learning could predict epileptic seizures

Patients with epilepsy never know when to expect a paralyzing attack. “It really affects quality of life. For example, you can’t drive a car; it’s a burden; you can’t take part in every sphere of daily life the way you could,” says Christian Meisel, a neuroscientist and fellow at the US National Institutes of Health. “The ideal would be to have some kind of warning system.”

And treatments for epilepsy are far from perfect. Some patients take anticonvulsant medications around the clock, but these drugs have serious side effects. And for 20 to 30 percent of patients, no drug works at all.

Forecasting could change the rules of the game.

If people with epilepsy knew a seizure was coming, they could at least find a safe place. Forecasting might also open the way to new treatments: a prediction could signal a device to inject a fast-acting antiepileptic drug or to send an electrical pulse that prevents the seizure from developing.

Meisel showed me the EEG (electroencephalogram) of one patient with epilepsy. “At this point, there was no seizure,” says Meisel. “The question is: does this brain activity show whether a seizure is an hour away, or more than four hours away?”

For a clinician, that would be very difficult to predict, “if not impossible,” he says.

But information about an impending seizure may be hidden in this maelstrom of activity. To test this possibility, Meisel’s lab recently took part in a competition organized by the online data-science community Kaggle. Kaggle provided EEG recordings from three patients with epilepsy, collected over several years. Meisel used machine learning to analyze the data and find patterns.

How well can EEG predict seizures, so that patients can prepare for them? “If we had a perfect system that predicts everything, it would score 1,” says Meisel. “And if the system predicts at chance, no more reliably than tossing a coin, it scores 0.5. We are now at 0.8. That means we do not make perfect predictions, but our forecasts are much more reliable than random guesses.” (That sounds great, but in reality this approach is still more theoretical than practical: the EEG recordings of the patients studied were intracranial, which requires an invasive procedure.)
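
Meisel doesn’t name the metric, but a scale on which 1 is perfect and 0.5 is a coin toss matches the area under the ROC curve (AUC), a standard score for this kind of yes/no forecast; we assume that is what he means. A minimal sketch: AUC is the probability that a randomly chosen pre-seizure recording receives a higher risk score than a randomly chosen seizure-free one. The labels and scores below are hypothetical.

```python
def auc(labels, scores):
    """Area under the ROC curve via pairwise comparison.

    labels: 1 for a pre-seizure segment, 0 for a seizure-free one.
    scores: the model's predicted risk for each segment.
    AUC is the fraction of (positive, negative) pairs in which the positive
    example scores higher; ties count as half a win.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


labels = [1, 1, 0, 0]
print(auc(labels, [0.9, 0.8, 0.2, 0.1]))  # 1.0: perfect ranking
print(auc(labels, [0.5, 0.5, 0.5, 0.5]))  # 0.5: no better than a coin toss
print(auc(labels, [0.9, 0.4, 0.5, 0.1]))  # 0.75: mostly right, one pair wrong
```

Because AUC only looks at rankings, it does not depend on where an alarm threshold is set, which makes it a convenient single number for comparing predictors.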

Meisel works in theoretical neuroscience, developing models in which epileptic seizures grow from small pockets of neural activity into an all-encompassing storm. He says that machine learning is becoming a useful tool for developing his theory: he can turn the theory into a machine learning model and see whether it makes more or less reliable forecasts. “If this works, then my theory is correct,” he says.

For machine learning to yield truly significant results, neuroscience needs to become a big-data science

Machine learning will not solve all the major problems of neuroscience. It is limited by the quality of the information coming from fMRI and other brain scans. (Recall that fMRI yields only a blurry image of the brain.) “Even if we had an infinite number of images, we still wouldn’t be able to make perfect predictions, because current imaging methods are very imperfect,” says Gael Varoquaux, a computer scientist who has turned machine learning into a tool for neuroscientists.

In any case, all the neuroscientists I talked to admired machine learning, if only because it makes the science more rigorous. Machine learning copes with the so-called “multiple comparisons problem,” so scientists can pick out statistically significant results from the data (provided there are enough brain scans in which some region “lights up”). With machine learning, you find out whether or not your prediction about the brain’s response is correct. “The ability to predict,” says Varoquaux, “gives you control.”
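
The multiple comparisons problem itself is easy to demonstrate. The sketch below is an illustration we added, and it uses the classical Bonferroni correction rather than anything specific to machine learning. It simulates 10,000 voxels with no real effect: because p-values under the null hypothesis are uniformly distributed, about 5 percent of voxels will pass a p < 0.05 threshold by chance alone. Dividing the threshold by the number of tests removes nearly all of these false “hits.” The voxel count and seed are arbitrary.

```python
import random


def count_false_positives(n_voxels=10_000, alpha=0.05, seed=1):
    """Simulate voxels with no real effect and count 'significant' ones.

    When there is no true effect, a well-behaved test's p-values are
    uniformly distributed on [0, 1], so we draw them directly.
    Returns (uncorrected hits, Bonferroni-corrected hits).
    """
    rng = random.Random(seed)
    p_values = [rng.random() for _ in range(n_voxels)]
    uncorrected = sum(p < alpha for p in p_values)
    # Bonferroni: divide the threshold by the number of tests performed.
    bonferroni = sum(p < alpha / n_voxels for p in p_values)
    return uncorrected, bonferroni


uncorrected, corrected = count_false_positives()
print(uncorrected, corrected)
```

With 10,000 tests, the expected number of uncorrected false positives is 500, while the Bonferroni threshold of 0.05/10,000 lets through only 0.05 on average.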

A big-data approach also means that neuroscientists can begin studying the brain outside the laboratory. “All of our traditional models for studying brain activity are based on very specific, artificial experimental conditions,” says Ghuman. “We’re not quite sure that everything will happen the same way in real life.” If we had enough data on brain activity (from mobile EEG scanners, for example) and on the corresponding reactions, machine learning programs could start looking for patterns linking the two, without the need for contrived experiments.

There is another use of machine learning in neuroscience, and it sounds fantastic: we can use machine learning on the brain to create even better machine learning programs. The biggest advance in machine learning over the last ten years has been the idea of so-called “convolutional neural networks,” which Google uses to recognize objects in photographs. These neural networks are based on theories from neuroscience. So if machine learning helps us better understand how the brain is structured, programs may find new techniques for themselves and become smarter. The enhanced machine learning can then be brought back to study the brain further and learn more about neuroscience.
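
The core operation of a convolutional neural network is simple to sketch: a small grid of weights (a kernel) slides over an image and responds wherever the image matches its pattern, and a nonlinearity then discards negative responses. In a real network the kernels are learned from data; here one edge-detecting kernel is picked by hand for illustration, and the 4-by-4 image is made up.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution as used in CNNs.

    Strictly this is cross-correlation (the kernel is not flipped), which is
    what most CNN libraries compute. At each position, multiply the kernel
    element-wise with the image patch under it and sum the products.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[r + i][c + j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(feature_map):
    """Nonlinearity applied after convolution: negative responses become 0."""
    return [[max(0, v) for v in row] for row in feature_map]


# A dark-to-bright vertical edge and a hand-picked edge-detecting kernel.
image = [[0, 0, 1, 1] for _ in range(4)]
kernel = [[-1, 1]]
print(relu(conv2d(image, kernel)))  # [[0, 1, 0], [0, 1, 0], [0, 1, 0], [0, 1, 0]]
```

A full network stacks many such layers, so that early kernels pick up edges and later ones combine them into shapes and objects.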

(Researchers can also use machine learning programs designed to mimic human visual behavior. Perhaps a program that learns to reproduce human behavior will also reproduce how the brain actually does it.)

“I don’t want people to think that we are suddenly inventing a mind-reading device. We’re not,” says Varoquaux. “We expect to develop computational models with more capabilities to better understand the brain. And I think we will succeed.”